Mathematical foundations of the theory of test design. Theoretical foundations of testing

Basic concepts of test theory.

A measurement or test carried out to determine an athlete's condition or ability is called a test. Every test involves measurement, but not every measurement serves as a test. The measurement or test procedure is called testing.

A test based on motor tasks is called a motor test. There are three groups of motor tests:

- 1. Control exercises, in which the athlete is tasked to show maximum results.

- 2. Standard functional tests, during which the task, the same for everyone, is dosed either according to the amount of work performed, or according to the magnitude of physiological changes.

- 3. Maximum functional tests, during which the athlete must show maximum results.

High quality testing requires knowledge of measurement theory.

Basic concepts of measurement theory.

Measurement is the identification of correspondence between the phenomenon being studied, on the one hand, and numbers, on the other.

Measurement theory rests on three basic concepts: measurement scales, units of measurement, and measurement accuracy.

Measurement scales.

A measurement scale is a law by which a numerical value is assigned to a measured result as it increases or decreases. Let's look at some of the scales used in sports.

Name scale (nominal scale).

This is the simplest of all scales. In it, numbers act as labels and serve only to identify and distinguish the objects under study (for example, the numbering of players on a football team). The numbers of a nominal scale may be swapped freely. The scale has no “more – less” relations, so some authors believe that using a nominal scale should not be considered measurement at all. Only a few mathematical operations can be performed on a nominal scale: for example, its numbers cannot be added or subtracted, but you can count how many times (how often) a particular number appears.
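To illustrate which operations remain meaningful on a nominal scale, here is a minimal Python sketch. The jersey numbers are hypothetical data; the only summaries computed are frequency counts and the most frequent label (the mode), since adding or averaging labels would be meaningless.

```python
from collections import Counter

# Hypothetical data: jersey numbers of the players who touched the ball, in order.
# On a nominal scale these numbers are labels, so summing or averaging them is meaningless.
touches = [7, 10, 7, 9, 10, 7, 4, 10, 7]

# Counting how often each label occurs is a valid operation.
frequencies = Counter(touches)

# The mode (most frequent label) is the typical "central value" of nominal data.
mode_label, mode_count = frequencies.most_common(1)[0]

print(dict(frequencies))       # {7: 4, 10: 3, 9: 1, 4: 1}
print(mode_label, mode_count)  # 7 4
```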

Order scale.

There are sports in which the athlete's result is determined only by the place taken in a competition (for example, martial arts). After such competitions it is clear which athlete is stronger and which is weaker, but it is impossible to say how much stronger or weaker. If three athletes took first, second and third places, the difference in their skill remains unclear: the second athlete may be almost equal to the first, or may be much weaker than the first and almost identical to the third. The places occupied on an order scale are called ranks, and the scale itself is called a rank or non-metric scale. Its numbers are ordered by rank (i.e., by the places occupied), but the intervals between them cannot be measured exactly. Unlike the nominal scale, the order scale makes it possible not only to establish whether measured objects are equal or unequal, but also to determine the direction of the inequality in the form of judgments such as “more – less” or “better – worse.”

Using order scales, you can measure qualitative indicators that do not have a strict quantitative measure. These scales are used especially widely in the humanities: pedagogy, psychology, sociology.

More mathematical operations can be applied to the ranks of an order scale than to the numbers of a nominal scale.
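A minimal sketch of turning results into order-scale ranks and summarising them. It assumes that tied results share the mean of the places they occupy (a common convention, not stated in the text); the judges' totals and the season places are hypothetical, and `rank_results` is an illustrative helper name.

```python
from statistics import median


def rank_results(results, higher_is_better=True):
    """Convert results to order-scale ranks (1 = best place).

    Tied results share the mean of the places they occupy.
    """
    order = sorted(range(len(results)), key=lambda i: results[i], reverse=higher_is_better)
    ranks = [0.0] * len(results)
    pos = 0
    while pos < len(order):
        # Extend `end` over a run of tied results starting at `pos`.
        end = pos
        while end + 1 < len(order) and results[order[end + 1]] == results[order[pos]]:
            end += 1
        # Places are 1-based: the tied group occupies places pos+1 .. end+1.
        shared_rank = (pos + 1 + end + 1) / 2
        for k in range(pos, end + 1):
            ranks[order[k]] = shared_rank
        pos = end + 1
    return ranks


# Hypothetical judges' totals for five athletes (higher is better).
print(rank_results([8.5, 9.1, 8.5, 7.9, 9.4]))  # [3.5, 2.0, 3.5, 5.0, 1.0]

# The median is meaningful for ranks; the mean assumes equal intervals between places.
places_in_season = [1, 3, 2, 2, 5]  # hypothetical places of one athlete
print(median(places_in_season))  # 2
```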

Interval scale.

This is a scale in which numbers are not only ordered by rank but also separated by definite intervals. What distinguishes it from the ratio scale described below is that the zero point is chosen arbitrarily. Examples include calendar time (the starting point of chronology in different calendars was set for arbitrary reasons), joint angle (the angle at the elbow joint with the forearm fully extended can be taken as either zero or 180°), temperature, the potential energy of a lifted load, electric field potential, etc.

The results of measurements on an interval scale can be processed by all mathematical methods except the calculation of ratios. Interval scales answer the question “how much more?” but do not allow us to state that one value of a measured quantity is so many times greater or smaller than another. For example, if the temperature rises from 10 to 20 °C, it cannot be said that it has become twice as warm.
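A short sketch of why ratios are not meaningful on an interval scale. It uses the standard Celsius-to-Fahrenheit conversion: both scales place zero arbitrarily, so a ratio of raw readings changes when the zero moves, while a ratio of differences does not.

```python
def c_to_f(t_c):
    """Convert °C to °F: a different unit and a different, equally arbitrary zero."""
    return t_c * 9 / 5 + 32


temps_c = [10.0, 20.0, 30.0]
temps_f = [c_to_f(t) for t in temps_c]  # [50.0, 68.0, 86.0]

# A ratio of raw readings depends on where zero was placed, so "twice as warm" is not valid.
ratio_c = temps_c[1] / temps_c[0]  # 2.0
ratio_f = temps_f[1] / temps_f[0]  # 1.36

# A ratio of differences is the same on both scales: "how much more" is answerable.
diff_ratio_c = (temps_c[2] - temps_c[0]) / (temps_c[1] - temps_c[0])  # 2.0
diff_ratio_f = (temps_f[2] - temps_f[0]) / (temps_f[1] - temps_f[0])  # 2.0

print(ratio_c, ratio_f, diff_ratio_c, diff_ratio_f)
```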

Ratio scale.

This scale differs from the interval scale only in that it strictly defines the position of the zero point. Thanks to this, the ratio scale does not impose any restrictions on the mathematical apparatus used to process observational results.

In sports, ratio scales are used to measure distance, strength, speed, and dozens of other variables. The ratio scale also measures quantities formed as differences between numbers measured on an interval scale. Thus, calendar time is counted on an interval scale, whereas time intervals are measured on a ratio scale. When a ratio scale is used (and only in this case!), measuring any quantity reduces to determining experimentally the ratio of this quantity to another quantity of the same kind taken as the unit. By measuring the length of a jump, we find out how many times this length exceeds the length of another body taken as the unit of length (a meter ruler, in a particular case); by weighing a barbell, we determine the ratio of its mass to the mass of another body, a standard one-kilogram weight, and so on. If we restrict ourselves to ratio scales, we can give another (narrower, more specific) definition of measurement: to measure a quantity means to find experimentally its ratio to the corresponding unit of measurement.
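The calendar-time example can be sketched with Python's standard `datetime` module. The timestamps are hypothetical; the point is that timestamps (interval scale) can only be subtracted, while the resulting durations (ratio scale) can be divided by a unit or by each other.

```python
from datetime import datetime

# Hypothetical start and finish timestamps of two efforts.
# Calendar timestamps lie on an interval scale: the origin of the calendar is arbitrary,
# and datetime objects cannot be multiplied or divided by one another.
start_a, finish_a = datetime(2024, 5, 1, 10, 0, 0), datetime(2024, 5, 1, 10, 4, 0)
start_b, finish_b = datetime(2024, 5, 1, 11, 0, 0), datetime(2024, 5, 1, 11, 2, 0)

# Differences of timestamps are durations, which lie on a ratio scale with a true zero.
duration_a = finish_a - start_a  # 4 minutes
duration_b = finish_b - start_b  # 2 minutes

# Measuring on a ratio scale = finding the ratio of the quantity to a unit (here, 1 second).
seconds_a = duration_a.total_seconds()  # 240.0

# Ratios of durations are meaningful: effort A lasted twice as long as effort B.
ratio = duration_a / duration_b  # 2.0
print(seconds_a, ratio)
```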

Units of measurement.

For the results of different measurements to be compared with one another, they must be expressed in the same units. In 1960, the General Conference on Weights and Measures adopted the International System of Units, abbreviated SI (from the French Système International). Its use is now preferred in all fields of science and technology, in the economy, and in teaching.

The SI currently includes seven base units that are independent of one another (see Table 1.1).

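Table 1.1. SI base units

| Quantity | Unit | Symbol |
|---|---|---|
| Length | meter | m |
| Mass | kilogram | kg |
| Time | second | s |
| Electric current | ampere | A |
| Thermodynamic temperature | kelvin | K |
| Amount of substance | mole | mol |
| Luminous intensity | candela | cd |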

From these base units, the units of all other physical quantities are derived. Derived units are defined by formulas that relate physical quantities to one another. For example, the unit of length (meter) and the unit of time (second) are base units, and the unit of speed (meter per second) is a derived unit.

In addition to the base units, the SI originally distinguished two supplementary units: the radian, the unit of plane angle, and the steradian, the unit of solid angle (an angle in space). Since 1995 they have been classed as derived units.

Measurement accuracy.

No measurement can be made absolutely accurately. A measurement result inevitably contains an error, and the more accurate the measurement method and the measuring instrument, the smaller the error. For example, with an ordinary ruler graduated in millimeters it is impossible to measure length to an accuracy of 0.01 mm.

Basic and additional error.

Basic error is the error of a measurement method or measuring instrument that occurs under normal conditions of use.

Additional error is the error of a measuring instrument caused by its operating conditions deviating from normal ones. Clearly, instruments designed to operate at room temperature will not give accurate readings if they are used in summer at a stadium under the scorching sun or in winter in the cold. Measurement errors may also occur when the mains voltage or the battery voltage is below normal or unstable.

Absolute and relative errors.

The value $E = A - A_0$, the difference between the reading of the measuring instrument ($A$) and the true value of the measured quantity ($A_0$), is called the absolute measurement error. It is expressed in the same units as the measured quantity itself.

In practice, it is often more convenient to use the relative error rather than the absolute one. There are two kinds of relative measurement error: actual and reduced. The actual relative error is the ratio of the absolute error to the true value of the measured quantity:

$$\delta = \frac{E}{A_0} \cdot 100\%$$

The reduced relative error is the ratio of the absolute error to the maximum possible value of the measured quantity ($A_{\max}$):

$$\gamma = \frac{E}{A_{\max}} \cdot 100\%$$
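The three error measures defined above can be computed directly. A minimal sketch, assuming a hypothetical hand dynamometer with a scale limit of 100 kg that reads 42 kg when the true force is 40 kg; the function names are illustrative only.

```python
def absolute_error(reading, true_value):
    """Absolute error E = A - A0, in the units of the measured quantity."""
    return reading - true_value


def actual_relative_error(reading, true_value):
    """Actual relative error: E / A0 * 100 %."""
    return absolute_error(reading, true_value) * 100.0 / true_value


def reduced_relative_error(reading, true_value, max_value):
    """Reduced relative error: E / A_max * 100 %, A_max = largest value the instrument measures."""
    return absolute_error(reading, true_value) * 100.0 / max_value


# Hypothetical dynamometer: true force 40 kg, reading 42 kg, scale limit 100 kg.
print(absolute_error(42.0, 40.0))                 # 2.0 (kg)
print(actual_relative_error(42.0, 40.0))          # 5.0 (%)
print(reduced_relative_error(42.0, 40.0, 100.0))  # 2.0 (%)
```

Because its denominator is fixed, the reduced error lets one compare errors at different points of the same scale, whereas the actual relative error of a fixed absolute error grows as the measured value approaches zero.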

Systematic and random errors.

A systematic error is one whose value does not change from measurement to measurement. Because of this, a systematic error can often be predicted in advance or, at worst, detected and eliminated at the end of the measurement process.

The method for eliminating systematic error depends primarily on its nature. Systematic measurement errors can be divided into three groups:

errors of known origin and known magnitude;

errors of known origin but unknown magnitude;

errors of unknown origin and unknown magnitude.

The most harmless are the errors of the first group: they are easily removed by introducing appropriate corrections to the measurement result.

The second group includes, first of all, errors associated with the imperfection of the measurement method and measuring equipment. For example, the error in measuring physical performance using a mask for collecting exhaled air: the mask makes breathing difficult, and the athlete naturally demonstrates physical performance that is underestimated compared to the true one measured without a mask. The magnitude of this error cannot be predicted in advance: it depends on the individual abilities of the athlete and his state of health at the time of the study.

Another example of a systematic error in this group is an error caused by imperfect equipment, when a measuring instrument is known to overestimate or underestimate the true value of the measured quantity, but the magnitude of the error is unknown.

Errors of the third group are the most dangerous; their occurrence is associated both with the imperfection of the measurement method and with the characteristics of the object of measurement - the athlete.

Random errors arise under the influence of various factors that cannot be predicted in advance or accurately taken into account. Random errors cannot be eliminated in principle. However, the methods of mathematical statistics make it possible to estimate the magnitude of the random error and take it into account when interpreting the measurement results. Without statistical processing, measurement results cannot be considered reliable.
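A minimal sketch of how the two kinds of error are handled in practice, under hypothetical assumptions: a measuring tape known to read 1.5 cm too long (a systematic error of known origin and magnitude, removed by a correction), and five repeated readings of the same long-jump mark whose scatter is random error, summarised by the mean and its standard error.

```python
from math import sqrt
from statistics import mean, stdev

# Hypothetical repeated readings (cm) of the same long-jump mark.
readings = [231.0, 229.0, 233.0, 230.0, 232.0]

# Hypothetical systematic error of known origin and magnitude: the tape reads 1.5 cm too long.
SYSTEMATIC_OFFSET_CM = 1.5

# 1) Remove the systematic error by applying a correction to every reading.
corrected = [r - SYSTEMATIC_OFFSET_CM for r in readings]

# 2) Random error cannot be removed, only estimated statistically.
m = mean(corrected)            # best estimate of the measured value
s = stdev(corrected)           # sample standard deviation of single readings
se = s / sqrt(len(corrected))  # standard error of the mean

print(f"{m:.1f} ± {se:.2f} cm")  # 229.5 ± 0.71 cm
```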

A measurement or test performed to determine the condition or ability of an athlete is called a test. Not every measurement can be used as a test, but only one that meets special requirements: standardization, the presence of a rating system, reliability, information content, and objectivity. Tests that meet the requirements of reliability, information content and objectivity are called sound (good-quality) tests.

The testing process is called testing, and the resulting numerical values are called test results.

Tests based on motor tasks are called motor tests. Depending on the task facing the subject, three groups of motor tests are distinguished.

Types of motor tests

| Test name | Task for the athlete | Test result | Example |
|---|---|---|---|
| Control exercise | Show maximum result | Motor achievements | 1500 m run time |
| Standard functional tests | The same for everyone, dosed: 1) according to the amount of work performed; 2) by the magnitude of physiological changes | 1) Physiological or biochemical indicators during standard work; 2) Motor indicators at a standard magnitude of physiological changes | 1) Heart rate recorded during standard work of 1000 kgm/min; 2) Running speed at a heart rate of 160 beats/min |
| Maximum functional tests | Show maximum result | Physiological or biochemical indicators | Determination of maximum oxygen debt or maximum oxygen consumption |

Sometimes not one but several tests with a common final goal are used. Such a group of tests is called a test battery.

It is known that even with the most strict standardization and precise equipment, test results always vary somewhat. Therefore, one of the important conditions for selecting good tests is their reliability.

Reliability of the test is the degree of agreement between results when the same people are repeatedly tested under the same conditions. There are four main reasons causing intra-individual or intra-group variation in test results:

change in the condition of the subjects (fatigue, change in motivation, etc.);

uncontrolled changes in external conditions and equipment;

change in the state of the person conducting or evaluating the test (well-being, change of experimenter, etc.);

imperfection of the test (for example, obviously imperfect and unreliable tests - free throws into a basketball basket before the first miss, etc.).

The reliability criterion for a test can be the reliability coefficient, calculated as the ratio of the true variance to the variance recorded in the experiment: $r = s^2_{\text{true}} / s^2_{\text{recorded}}$, where the true variance is the variance that would be obtained from an infinitely large number of observations under the same conditions, and the recorded variance is obtained from experimental studies. In other words, the reliability coefficient is simply the proportion of true variation in the variation recorded in the experiment.

In addition to this coefficient, the reliability index is also used; it is treated as the theoretical coefficient of correlation between the recorded and true values of the same test. This is the most common criterion for assessing the quality (reliability) of a test.

One characteristic of test reliability is equivalence, which reflects the degree of agreement between the results of testing the same quality (for example, a physical quality) with different tests. How test equivalence is treated depends on the specific task. On the one hand, if two or more tests are equivalent, using them together increases the reliability of the estimates; on the other hand, it may be enough to use just one of the equivalent tests, which simplifies testing.

If all the tests in a battery are highly equivalent, the battery is called homogeneous (for example, when assessing jumping ability, one would expect the long jump, high jump and triple jump to be homogeneous). Conversely, if the battery contains no equivalent tests (as when assessing general physical fitness), all its tests measure different properties, i.e., the battery is essentially heterogeneous.

The reliability of tests can be increased to a certain extent by:

more stringent standardization of testing;

increasing the number of attempts;

increasing the number of evaluators and increasing the consistency of their opinions;

increasing the number of equivalent tests;

better motivation of subjects.
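The effect of increasing the number of attempts is usually quantified in classical test theory with the Spearman–Brown formula, which the text does not give: if a single attempt has reliability r, the mean of k parallel attempts has reliability kr / (1 + (k − 1)r). A minimal sketch, assuming the attempts behave as parallel measurements; the single-attempt reliability of 0.6 is hypothetical.

```python
def spearman_brown(r_single, k):
    """Reliability of the mean of k parallel attempts, given the reliability of one attempt."""
    return k * r_single / (1 + (k - 1) * r_single)


def attempts_needed(r_single, r_target):
    """Number of parallel attempts (possibly fractional) needed to reach r_target."""
    return r_target * (1 - r_single) / (r_single * (1 - r_target))


# Hypothetical: one attempt has reliability 0.6.
print(round(spearman_brown(0.6, 3), 3))     # 0.818
print(round(attempts_needed(0.6, 0.9), 2))  # 6.0
```

In practice the result of `attempts_needed` is rounded up to a whole number of attempts; here about six attempts would be needed to reach a reliability of 0.9.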

Test objectivity is a special case of reliability: the independence of test results from the person conducting the test.

The information content of a test is the degree of accuracy with which it measures the property (the quality of the athlete) that it is used to evaluate. The same test may have different information content in different cases. The question of a test's informativeness breaks down into two specific questions:

What does this test measure? How accurately does it measure it?

For example, can an indicator such as maximal oxygen consumption (VO2max) be used to judge the preparedness of long-distance runners, and if so, with what degree of accuracy? Can this test be used in the monitoring (control) process?

If the test is used to determine the condition of the athlete at the time of examination, then they speak of diagnostic information content of the test. If, based on the test results, they want to draw a conclusion about the athlete’s possible future performance, they talk about prognostic information content. A test can be diagnostically informative, but not prognostically, and vice versa.

The degree of information content can be characterized quantitatively, on the basis of experimental data (so-called empirical information content), and qualitatively, on the basis of a meaningful analysis of the situation (logical information content). In practical work, logical (meaningful) analysis should always precede mathematical analysis. An indicator of a test's informativeness is the correlation coefficient between the criterion and the test result (the criterion is an indicator that clearly reflects the property to be measured with the test).

When the information content of a single test is insufficient, a battery of tests is used. However, even when each test in the battery is individually informative (judging by the correlation coefficients), the battery does not yield a single number. Here a more complex method of mathematical statistics, factor analysis, can help: it determines how many and which tests act together on each factor and how much each test contributes to each factor. It is then easy to select the tests (or combinations of tests) that assess individual factors most accurately.

| No. | Question | Answer options |
|---|---|---|
| 1 | What is a test? | |
| 2 | What is testing? | Quantifying a quality or condition of an athlete; A measurement or test conducted to determine the condition or ability of an athlete; The testing process that quantitatively evaluates a quality or condition of an athlete; No definition needed |
| 3 | What is a test result? | Quantifying a quality or condition of an athlete; A measurement or test conducted to determine the condition or ability of an athlete; The testing process that quantitatively evaluates a quality or condition of an athlete; No definition needed |
| 4 | What type of test is the 100 m run? | |
| 5 | What type of test is hand dynamometry? | Control exercise; Functional test; Maximum functional test |
| 6 | What type of test is the measurement of maximal oxygen consumption (VO2max)? | Control exercise; Functional test; Maximum functional test |
| 7 | What type of test is a three-minute run with a metronome? | Control exercise; Functional test; Maximum functional test |
| 8 | What type of test is the maximum number of pull-ups on the bar? | Control exercise; Functional test; Maximum functional test |
| 9 | In what case is a test considered informative? | |
| 10 | When is a test considered reliable? | The test gives reproducible results when repeated; The test measures the athlete's quality of interest; The test results are independent of the person administering the test |
| 11 | When is a test considered objective? | The test gives reproducible results when repeated; The test measures the athlete's quality of interest; The test results are independent of the person administering the test |
| 12 | What criterion is needed when evaluating a test's information content? | |
| 13 | What criterion is needed when evaluating a test's reliability? | Student's t-test; Fisher's F-test; Correlation coefficient; Coefficient of determination; Variance |
| 14 | What criterion is needed when evaluating a test's objectivity? | Student's t-test; Fisher's F-test; Correlation coefficient; Coefficient of determination; Variance |
| 15 | What is the information content of a test called if the test is used to assess an athlete's level of fitness? | |
| 16 | Which information content of control exercises does a coach rely on when selecting children for a sports section? | Logical; Prognostic; Empirical; Diagnostic |
| 17 | Is correlation analysis necessary to assess the information content of tests? | |
| 18 | Is factor analysis necessary to assess the information content of tests? | |
| 19 | Can the reliability of a test be assessed using correlation analysis? | |
| 20 | Can the objectivity of a test be assessed using correlation analysis? | |
| 21 | Will tests designed to assess general physical fitness be equivalent? | |
| 22 | When the same quality is measured with different tests, the tests used are those... | Designed to measure the same quality; Having a high correlation with each other; Having a low correlation with each other |

FUNDAMENTALS OF ASSESSMENT THEORY

Special points tables are often used to evaluate sports results. Their purpose is to convert a sports result (expressed in objective units) into conventional points. The law by which sports results are converted into points is called a rating scale. The scale can be specified as a mathematical expression, a table or a graph. There are four main types of scales used in sports and physical education:

Proportional scales;

Regressive scales;

Progressive scales;

Sigmoid scales.

Proportional scales suggest the awarding of the same number of points for an equal increase in results (for example, for every 0.1 s of improvement in the result in the 100 m run, 20 points are awarded). Such scales are used in modern pentathlon, speed skating, cross-country skiing, Nordic combined, biathlon and other sports.

Regressive scales award fewer and fewer points for the same improvement as the level of achievement rises (for example, an improvement in the 100 m run from 15.0 to 14.9 s adds 20 points, whereas 0.1 s in the 10.0–9.9 s range adds only 15 points).

Progressive scales. Here, the higher the sports result, the greater the increase in points for its improvement (for example, for an improvement in running time from 15.0 to 14.9 s, 10 points are added, and from 10.0 to 9.9 s - 100 points). Progressive scales are used in swimming, certain types of athletics, and weightlifting.

Sigmoid scales are rarely used in sports but are widely used to assess physical fitness (for example, the scale of physical fitness standards for the US population has this shape). In these scales, improvements in the zones of very low and very high achievement are rewarded sparingly, while improvements in the middle zone bring the most points.
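The four shapes can be compared by the number of points each adds for one more 0.1 s of improvement in the 100 m. A minimal sketch: the proportional, progressive and regressive functions below are hypothetical formulas chosen only to reproduce the example point values quoted above (20 points per 0.1 s; 10 versus 100 points; 20 versus 15 points), and the logistic curve for the sigmoid scale is likewise an illustrative assumption, not any official scoring table.

```python
import math

BASELINE_S = 15.0  # hypothetical result worth 0 points
STEP_S = 0.1       # scoring step


def steps(time_s):
    """Number of 0.1 s improvements relative to the baseline (14.9 s -> 1, 10.0 s -> 50)."""
    return round((BASELINE_S - time_s) / STEP_S)


def proportional(n):
    return 20.0 * n  # every step is worth 20 points


def progressive(n):
    return 0.9 * n * n + 9.1 * n  # step gain = 1.8 n + 10: grows with the level


def regressive(n):
    return -0.05 * n * n + 20.05 * n  # step gain = 20 - 0.1 n: shrinks with the level


def sigmoid(n, top=1000.0, slope=0.15, middle=25):
    return top / (1 + math.exp(-slope * (n - middle)))  # steep only in the middle zone


def step_gain(scale, time_s):
    """Points added for improving time_s by one more step (e.g. 15.0 -> 14.9 s)."""
    n = steps(time_s)
    return scale(n + 1) - scale(n)


for scale in (proportional, progressive, regressive, sigmoid):
    gains = [round(step_gain(scale, t), 1) for t in (15.0, 12.5, 10.0)]
    print(f"{scale.__name__:>12}: {gains}")
```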

The main objectives of assessment are:

compare different achievements in the same task;

compare achievements in different tasks;

define standards.

In sports metrology, a norm is the boundary value of a result that serves as the basis for assigning an athlete to one of the classification groups. There are three types of norms: comparative, individual and due (proper) norms.

Comparative standards are based on a comparison of people belonging to the same population. For example, dividing people into subgroups according to the degree of resistance (high, medium, low) or reactivity (hyperreactive, normoreactive, hyporeactive) to hypoxia.

Different gradations of assessments and norms (M is the group mean, σ is the standard deviation)

| Verbal grade | Norm (range of results) |
|---|---|
| Very low | Below M − 2σ |
| Low | From M − 2σ to M − 1σ |
| Below average | From M − 1σ to M − 0.5σ |
| Average | From M − 0.5σ to M + 0.5σ |
| Above average | From M + 0.5σ to M + 1σ |
| High | From M + 1σ to M + 2σ |
| Very high | Above M + 2σ |

These norms characterize only the relative success of subjects within a given population and say nothing about the population as a whole (or on average). Therefore, comparative norms must be compared with data obtained from other populations and used in combination with individual and due norms.
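The grades in the table, whose boundaries are the group mean M plus or minus multiples of the standard deviation σ, can be applied with a few lines of code. A minimal sketch, assuming M and σ have already been computed for the group and that each band includes its lower boundary (the table does not say how boundary values are assigned). The sketch also uses the normal distribution to show roughly what share of a normally distributed population falls into each band; these shares are computed, not taken from the original table.

```python
from statistics import NormalDist

# Upper boundary of each band in units of standard deviation (z-scores).
BANDS = [
    (-2.0, "Very low"),
    (-1.0, "Low"),
    (-0.5, "Below average"),
    (0.5, "Average"),
    (1.0, "Above average"),
    (2.0, "High"),
    (float("inf"), "Very high"),
]


def grade(result, m, sigma, higher_is_better=True):
    """Return the verbal grade of a result relative to the group mean m and SD sigma."""
    z = (result - m) / sigma
    if not higher_is_better:  # e.g. running times: a smaller result is better
        z = -z
    for upper, label in BANDS:
        if z < upper:
            return label
    return BANDS[-1][1]  # reached only if z is +inf or NaN


def expected_shares():
    """Share of a normally distributed population falling into each band."""
    std = NormalDist()
    shares, lower = {}, float("-inf")
    for upper, label in BANDS:
        shares[label] = std.cdf(upper) - std.cdf(lower)
        lower = upper
    return shares


# Hypothetical group: mean 2.20 m, SD 0.15 m in the standing long jump.
print(grade(2.52, 2.20, 0.15))  # z is about 2.13 -> Very high
for label, share in expected_shares().items():
    print(f"{label:>14}: {share:.1%}")
```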

Individual norms are based on comparing the performance of the same athlete in different states. For example, in many sports there is no general relationship between body weight and athletic performance; instead, each athlete has an individually optimal weight corresponding to his or her state of fitness. This norm can be monitored at different stages of sports training.

Due norms are based on an analysis of what a person must be able to do to cope successfully with the tasks that life sets before him. Examples include the standards of physical training programs and the proper values of vital capacity, basal metabolic rate, body weight and height, etc.

| No. | Question | Answer options |
|---|---|---|
| 1 | Is it possible to measure endurance directly? | |
| 2 | Is it possible to measure speed directly? | |
| 3 | Is it possible to measure agility (dexterity) directly? | |
| 4 | Is it possible to measure flexibility directly? | |
| 5 | Is it possible to measure the strength of individual muscles directly? | |
| 6 | Can an assessment be expressed as a qualitative characteristic (good, satisfactory, poor, pass, etc.)? | |
| 7 | Is there a difference between a measurement scale and a rating scale? | |
| 8 | What is a rating scale? | A system for measuring sports results; The law of converting sports results into points; A system for evaluating norms |
| 9 | A scale that awards the same number of points for an equal improvement in results is a … | |
| 10 | A scale that awards fewer and fewer points for the same improvement as sporting achievements increase is a … | Progressive scale; Regressive scale; Proportional scale; Sigmoid scale |
| 11 | A scale in which the higher the sports result, the more points an improvement earns, is a … | Progressive scale; Regressive scale; Proportional scale; Sigmoid scale |
| 12 | A scale in which improvements in the very low and very high achievement zones are rewarded sparingly, while improvements in the middle zone bring the most points, is a … | Progressive scale; Regressive scale; Proportional scale; Sigmoid scale |
| 13 | Norms based on comparing people who belong to the same population are called … | |
| 14 | Norms based on comparing the performance of the same athlete in different states are called … | Individual norms; Due norms; Comparative norms |
| 15 | Norms based on an analysis of what a person must be able to do to cope with the tasks set before him are called … | Individual norms; Due norms; Comparative norms |

BASIC CONCEPTS OF QUALIMETRY

Qualimetry (Latin qualitas, quality; Greek metron, measure) studies and develops quantitative methods for assessing qualitative characteristics.

Qualimetry is based on several starting points:

Any quality can be measured;

Quality depends on a number of properties that form the “quality tree” (for example, the quality tree of exercise performance in figure skating consists of three levels - highest, middle, lowest);

Each property is characterized by two numbers: a relative indicator and a weight; at each level, the weights of the properties sum to one (or 100%).
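The starting points above can be sketched as a weighted quality tree. A minimal sketch, assuming relative indicators on a 0 to 1 scale and aggregation by weighted sums at each level; the two-component figure-skating tree, its weights and its indicators are hypothetical, and `quality` is an illustrative function name.

```python
def quality(node):
    """Aggregate a quality tree.

    A leaf is a relative indicator in [0, 1]; an inner node is a list of
    (weight, child) pairs whose weights must sum to 1 at that level.
    """
    if isinstance(node, (int, float)):
        return float(node)
    total = sum(weight for weight, _ in node)
    if abs(total - 1.0) > 1e-9:
        raise ValueError(f"weights at one level sum to {total}, expected 1")
    return sum(weight * quality(child) for weight, child in node)


# Hypothetical figure-skating tree: two components, each split into elements.
program = [
    (0.6, [(0.5, 0.80), (0.3, 0.70), (0.2, 0.90)]),  # technique: jumps, spins, steps
    (0.4, [(0.5, 0.60), (0.5, 0.80)]),               # artistry: choreography, interpretation
]
print(round(quality(program), 3))  # 0.754
```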

Methodological techniques of qualimetry are divided into two groups:

Heuristic (intuitive), based on expert assessments and questionnaires;

Instrumental.

Expert assessment is an assessment obtained by seeking the opinions of experts. Typical examples include judging in gymnastics and figure skating, a competition for the best scientific work, etc.

An expert assessment includes the following main stages: formulating its purpose, selecting experts, choosing a methodology, conducting a survey and processing the information received, including assessing the consistency of the individual expert assessments. The degree of agreement between expert opinions is very important; it is assessed by the rank correlation coefficient (when there are several experts). Note that rank correlation underlies the solution of many qualimetry problems, since it allows mathematical calculations with qualitative characteristics.

In practice, an indicator of an expert's qualifications is often the deviation of his ratings from the average ratings of a group of experts.
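A minimal sketch of the two agreement checks mentioned above, with hypothetical scores from three judges: Spearman's rank correlation between pairs of experts (the no-ties formula, so it assumes no tied scores), and each expert's mean absolute deviation from the group's average ratings. The deviation measure is one simple choice; the text does not specify which deviation statistic is used.

```python
def ranks_desc(scores):
    """Rank scores so that the highest score gets rank 1 (assumes no ties)."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    ranks = [0] * len(scores)
    for place, idx in enumerate(order, start=1):
        ranks[idx] = place
    return ranks


def spearman_rho(scores_a, scores_b):
    """Spearman rank correlation without ties: 1 - 6*sum(d^2) / (n*(n^2 - 1))."""
    ra, rb = ranks_desc(scores_a), ranks_desc(scores_b)
    n = len(ra)
    d2 = sum((a - b) ** 2 for a, b in zip(ra, rb))
    return 1 - 6 * d2 / (n * (n * n - 1))


def mean_deviation_from_group(scores, all_scores):
    """Mean absolute deviation of one expert's scores from the group's mean scores."""
    group_mean = [sum(col) / len(col) for col in zip(*all_scores)]
    return sum(abs(s - g) for s, g in zip(scores, group_mean)) / len(scores)


# Hypothetical scores given by three judges to five skaters.
judges = {
    "A": [5.6, 5.4, 5.8, 5.1, 5.3],
    "B": [5.5, 5.3, 5.9, 5.2, 5.4],
    "C": [5.0, 5.7, 5.2, 5.5, 5.8],
}
print(round(spearman_rho(judges["A"], judges["B"]), 3))  # 0.9: high agreement
print(round(spearman_rho(judges["A"], judges["C"]), 3))  # -0.6: judge C disagrees
for name, scores in judges.items():
    print(name, round(mean_deviation_from_group(scores, list(judges.values())), 3))
```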

Questioning is a method of collecting opinions by having respondents fill out questionnaires. Questionnaires, along with interviews and conversations, are survey methods. Unlike interviews and conversations, questioning involves written responses from the person filling out the questionnaire (the respondent) to a system of standardized questions. It makes it possible to study motives of behavior, intentions, opinions, etc.

Using questionnaires, you can solve many practical problems in sports: assessing the psychological status of an athlete; his attitude to the nature and direction of training sessions; interpersonal relationships in the team; own assessment of technical and tactical readiness; dietary assessment and many others.

| No. | Question | Answer options |
|---|---|---|
| 1 | What does qualimetry study? | The quality of tests; The qualitative properties of a trait; The study and development of quantitative methods for assessing quality |
| 2 | What mathematical methods are used in qualimetry? | Pair correlation; Rank correlation; Analysis of variance |
| 3 | What methods are used to assess the level of performance? | |
| 4 | What methods are used to assess the variety of technical elements? | Questionnaire method; Expert assessment method; Method not specified |
| 5 | What methods are used to assess the complexity of technical elements? | Questionnaire method; Expert assessment method; Method not specified |
| 6 | What methods are used to evaluate an athlete's psychological state? | Questionnaire method; Expert assessment method; Method not specified |

CHAPTER 3. STATISTICAL PROCESSING OF TEST RESULTS

Statistical processing of test results makes it possible, on the one hand, to determine the subjects' results objectively and, on the other hand, to assess the quality of the test itself and of its tasks, in particular its reliability. The problem of reliability has received a lot of attention in classical test theory. This theory has not lost its relevance today: despite the appearance of more modern theories, classical theory continues to hold its position.

3.1. BASIC PROVISIONS OF CLASSICAL TEST THEORY

3.2. TEST RESULTS MATRIX

3.3. GRAPHICAL REPRESENTATION OF TEST SCORE

3.4. MEASURES OF CENTRAL TENDENCY

3.5. NORMAL DISTRIBUTION

3.6. VARIATION OF TEST SCORES OF SUBJECTS

3.7. CORRELATION MATRIX

3.8. TEST RELIABILITY

3.9. TEST VALIDITY

LITERATURE

BASIC PROVISIONS OF CLASSICAL TEST THEORY

The creator of the classical theory of mental tests is the famous British psychologist and author of factor analysis Charles Edward Spearman (1863–1945) [1]. He was born on September 10, 1863, and served in the British Army for a quarter of his life; for this reason, he received his Ph.D. only at the age of 41 [2]. Spearman carried out his dissertation research at the Leipzig Laboratory of Experimental Psychology under the direction of Wilhelm Wundt. At that time he was strongly influenced by Francis Galton's work on testing human intelligence. Spearman's students included R. Cattell and D. Wechsler; among his followers are A. Anastasi, J. P. Guilford, P. Vernon, C. Burt, and A. Jensen.

Louis Guttman (1916–1987) made a major contribution to the development of classical test theory.

Classical test theory was first presented comprehensively in the fundamental work of Harold Gulliksen (Gulliksen H., 1950) [4]. Since then, the theory has been somewhat modified; in particular, its mathematical apparatus has been improved. A modern presentation of classical test theory is given in the book by Crocker L., Algina J. (1986) [5]. Among Russian researchers, V. Avanesov (1989) [6] was the first to describe this theory. The work of Chelyshkova M.B. (2002) [7] provides information on the statistical justification of test quality.

Classical test theory is based on the following five basic principles.

1. The empirically obtained measurement result (X) is the sum of the true measurement result (T) and the measurement error (E) [8]:

$$X = T + E \tag{3.1.1}$$

The values of T and E are usually unknown.

2. The true measurement result can be expressed as the mathematical expectation E(X):

3. The correlation of true and false components across the set of subjects is zero, that is, ρ TE = 0.

4. The error components of any two tests are uncorrelated:

$$ \rho_{E_1 E_2} = 0 $$

5. The error components of one test are uncorrelated with the true components of any other test:

$$ \rho_{E_1 T_2} = 0 $$

In addition, classical test theory rests on two definitions: parallel tests and equivalent tests.

Parallel tests must satisfy requirements 1-5, and the true components of one test ($T_1$) must be equal to the true components of the other test ($T_2$) in every sample of subjects answering both tests. Thus $T_1 = T_2$ and, in addition, the variances are equal: $s_1^2 = s_2^2$.

Equivalent tests must meet all the requirements of parallel tests with one exception: the true components of one test need not equal the true components of the other test, but they must differ by the same constant $c$.

The condition for the equivalence of two tests is written as follows:

$$ T_1 = T_2 + c_{12} $$

where $c_{12}$ is the constant difference between the true results of the first and second tests.

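Based on the above provisions, a theory of test reliability has been constructed 9,10. Since the true and error components are uncorrelated (principle 3), the variance of the observed scores decomposes as

$$ s_X^2 = s_T^2 + s_E^2 $$

that is, the variance of the obtained test scores equals the sum of the variances of the true and error components.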

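Dividing both sides of this expression by $s_X^2$ and rearranging, we get:

$$ 1 - \frac{s_E^2}{s_X^2} = \frac{s_T^2}{s_X^2} \tag{3.1.3} $$

The right side of this equality is the reliability of the test ($r$). Thus, the reliability of the test can be written as:

$$ r = \frac{s_T^2}{s_X^2} = 1 - \frac{s_E^2}{s_X^2} \tag{3.1.4} $$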

Based on this formula, various expressions were subsequently proposed for estimating the test reliability coefficient. Reliability is the most important characteristic of a test: if it is unknown, the test results cannot be interpreted. Reliability characterizes the accuracy of a test as a measuring instrument; high reliability means high repeatability of test results under the same conditions.

In classical test theory, the most important problem is determining the subject's true test score ($T$). The empirical test score ($X$) depends on many conditions: the difficulty of the tasks, the preparedness of the test takers, the number of tasks, the testing conditions, etc. In a group of strong, well-prepared subjects, test results will usually be better than in a group of poorly prepared subjects. Consequently, the question of how to measure task difficulty for the general population of subjects remains open. The problem is that real empirical data are obtained from samples of subjects that are far from random. Typically these are study groups: sets of students who interact quite strongly with one another during learning and who study under conditions that are often not repeated for other groups.

We can find $s_E$ from equation (3.1.4):

$$ s_E = s_X \sqrt{1 - r} $$

Here the measurement accuracy is shown explicitly to depend on the standard deviation $s_X$ and on the test reliability $r$.
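To make equation (3.1.4) and the relation $s_E = s_X\sqrt{1 - r}$ concrete, here is a minimal Python sketch with hypothetical variances (true-score variance 81, error variance 19). The function names are illustrative only, not taken from any library. Recovering $s_E = \sqrt{19}$ from $s_X$ and $r$ alone is a quick check that the two formulas agree.

```python
import math


def reliability_from_variances(var_true: float, var_observed: float) -> float:
    """Reliability r = s_T^2 / s_X^2, as in equation (3.1.4)."""
    if var_observed <= 0:
        raise ValueError("observed variance must be positive")
    return var_true / var_observed


def standard_error_of_measurement(sd_observed: float, r: float) -> float:
    """Error standard deviation s_E = s_X * sqrt(1 - r)."""
    if not 0.0 <= r <= 1.0:
        raise ValueError("reliability must lie in [0, 1]")
    return sd_observed * math.sqrt(1.0 - r)


# Hypothetical variances, chosen only for illustration.
var_t, var_e = 81.0, 19.0
var_x = var_t + var_e  # observed variance = true variance + error variance
r = reliability_from_variances(var_t, var_x)
s_e = standard_error_of_measurement(math.sqrt(var_x), r)

print(f"r = {r:.2f}, s_E = {s_e:.3f}")  # s_E should equal sqrt(var_e)
```

For a fixed spread of scores $s_X$, the error band shrinks only as the square root of $1 - r$, so even a fairly reliable test (r = 0.81) leaves an error SD of almost half of $s_X$.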

Basics of test theory

1. Basic concepts of test theory

A measurement or trial performed to determine the condition or ability of an athlete is called a test.

Not all measurements can be used as tests, but only those that meet special requirements. These include:

1. standardization (the testing procedure and conditions must be the same in all cases of using the test);

2. reliability;

3. information content;

4. availability of a rating system.

Tests that meet the requirements of reliability and information content are called sound or authentic (Greek authentikos, "in a reliable manner").

The process of administering a test is called testing; the numerical value obtained from the measurement is the test result. For example, the 100 m run is a test, the procedure for conducting the races and timing them is testing, and the race time is the test result.

Tests based on motor tasks are called motor tests. Their results can be either motor achievements (time to cover a distance, number of repetitions, distance covered, etc.) or physiological and biochemical indicators.

Sometimes not one but several tests with a single final goal are used (for example, assessing an athlete's condition during the competitive period of training). Such a group of tests is called a test set, or test battery.

The same test, applied to the same subjects under the same conditions, should give identical results (unless the subjects themselves have changed). However, even with the most stringent standardization and precise equipment, test results always vary somewhat. For example, a subject who has just shown 215 kg in a deadlift dynamometry test shows only 190 kg on the repeat.

2. Test reliability and ways to determine it

The reliability of a test is the degree of agreement between results when the same people (or other objects) are tested repeatedly under the same conditions.

Variation in test-retest results is called within-individual, or within-group, or within-class.

Four main reasons cause this variation:

1. Change in the state of the subjects (fatigue, training, learning, change in motivation, concentration, etc.).

2. Uncontrolled changes in external conditions and equipment (temperature, wind, humidity, power supply voltage, presence of unauthorized persons, etc.), i.e. everything that is united by the term “random measurement error”.

3. Changing the state of the person conducting or evaluating the test (and, of course, replacing one experimenter or judge with another).

4. Imperfection of the test. Some tests are inherently unreliable: for example, when subjects shoot free throws, even a basketball player with a high shooting percentage may miss the first few throws by chance.

The main difference between test reliability theory and measurement error theory is that error theory assumes the measured quantity is constant, whereas test reliability theory assumes it changes from measurement to measurement. For example, the result of a completed attempt in the running long jump is a definite quantity that cannot change significantly over time. Of course, because of random factors (for example, unequal tension of the tape measure), this result cannot be measured with ideal accuracy (say, to 0.0001 mm). However, a more precise measuring instrument (for example, a laser rangefinder) can raise the accuracy to the required level. By contrast, if the task is to determine a jumper's preparedness at individual stages of the annual training cycle, even the most accurate measurement of his results will be of little help: they will change from attempt to attempt.

To understand the idea behind the methods used to judge test reliability, consider a simplified example. Suppose we need to compare the standing long jump results of two athletes based on two attempts each. Suppose further that each athlete's results vary within ±10 cm of his mean and equal 230 ± 10 cm (i.e., 220 and 240 cm) and 280 ± 10 cm (i.e., 270 and 290 cm), respectively. In this case the conclusion is completely unambiguous: the second athlete is superior to the first (the 50 cm difference between the means clearly exceeds the random fluctuations of ±10 cm). If, with the same intragroup variation (±10 cm), the difference between the subjects' means (intergroup variation) is small, it is much harder to draw a conclusion. Suppose the means are about 220 cm (210 cm in one attempt, 230 cm in the other) and 222 cm (212 and 232 cm). Then, if in the first attempt the first subject jumps 230 cm and the second only 212 cm, it will seem that the first is considerably stronger than the second. This example shows that what matters is not the intraclass variability itself but its relation to the interclass differences. The same intraclass variability yields different reliability when the differences between classes (in this particular case, between subjects) differ (Fig. 14).

Fig. 14. The ratio of inter- and intraclass variation with high (top) and low (bottom) reliability. Short vertical strokes show the results of individual attempts; the average results of the three subjects are also marked.
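The two jump scenarios above can be checked numerically. This sketch uses the attempt results given in the text and a hypothetical helper `between_vs_within`, which compares the difference between the two athletes' means with their average within-athlete standard deviation. It is only a rough illustration of the between/within ratio, not a formal reliability estimate.

```python
from statistics import mean, pstdev


def between_vs_within(athlete_a, athlete_b):
    """Difference of the two athletes' means vs. their average within-athlete SD."""
    between = abs(mean(athlete_b) - mean(athlete_a))
    within = mean([pstdev(athlete_a), pstdev(athlete_b)])
    return between, within


# Attempt results (cm) taken from the example in the text.
scenarios = {
    "high reliability": ([220, 240], [270, 290]),
    "low reliability": ([210, 230], [212, 232]),
}

for label, (a, b) in scenarios.items():
    between, within = between_vs_within(a, b)
    print(f"{label}: between = {between} cm, within = ±{within:.0f} cm, "
          f"ratio = {between / within:.1f}")
```

The within-athlete spread is the same (±10 cm) in both cases; only the between-athlete difference changes, from 50 cm to 2 cm, and with it the ratio that reliability depends on.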

The theory of test reliability assumes that the result of any measurement made on a person is the sum of two quantities:

$$ x = x_\infty + \theta $$

where $x_\infty$ is the so-called true result that we want to record, and $\theta$ is the error caused by uncontrolled changes in the subject's state and by random measurement errors.

The true result is understood as the mean value of $x$ over an infinitely large number of observations under the same conditions (hence the infinity sign in the subscript of $x$).

If the errors are random (they average out to zero, and errors in different attempts are independent of each other), mathematical statistics gives

$$ \sigma_x^2 = \sigma_\infty^2 + \sigma_\theta^2 $$

that is, the variance of the results recorded in the experiment equals the sum of the variances of the true results and of the errors.

The reliability coefficient is the ratio of the true variance to the variance recorded in the experiment:

$$ r_{tt} = \frac{\sigma_\infty^2}{\sigma_x^2} $$

In addition to the reliability coefficient, the reliability index is also used:

$$ r_{t\infty} = \frac{\sigma_\infty}{\sigma_x} = \sqrt{r_{tt}} $$

which is interpreted as the theoretical correlation coefficient between the recorded test values and the true ones.
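Because the true result cannot be observed in real data, a simulation is the simplest way to see the reliability coefficient and index side by side. The sketch below assumes a hypothetical normal model: true results with SD 15 cm and independent random errors with SD 7.5 cm, so the theoretical reliability coefficient is 225 / (225 + 56.25) = 0.8. It estimates the coefficient as the ratio of true to recorded variance and checks that its square root (the reliability index) is close to the correlation between recorded and true values. The helper names `pvar` and `pcorr` are illustrative.

```python
import random
from statistics import fmean


def pvar(xs):
    """Population variance (divides by n)."""
    m = fmean(xs)
    return fmean([(x - m) ** 2 for x in xs])


def pcorr(xs, ys):
    """Pearson correlation computed from population moments."""
    mx, my = fmean(xs), fmean(ys)
    cov = fmean([(x - mx) * (y - my) for x, y in zip(xs, ys)])
    return cov / (pvar(xs) * pvar(ys)) ** 0.5


random.seed(1)  # fixed seed so the run is reproducible
n = 20_000
# Hypothetical model: true results ~ N(250, 15^2) cm, random errors ~ N(0, 7.5^2) cm.
true_scores = [random.gauss(250.0, 15.0) for _ in range(n)]
observed = [t + random.gauss(0.0, 7.5) for t in true_scores]

r_tt = pvar(true_scores) / pvar(observed)  # reliability coefficient
index = r_tt ** 0.5                         # reliability index
corr = pcorr(observed, true_scores)         # correlation of recorded with true values

print(f"r_tt = {r_tt:.3f}  index = {index:.3f}  corr(x, true) = {corr:.3f}")
```

Expect $r_{tt}$ near 0.8 and both the index and the correlation near 0.894; in real testing only `observed` would be available, which is why indirect methods are needed.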

The concept of a true test result is an abstraction (it cannot be measured experimentally), so indirect methods must be used. The preferred method for assessing reliability is analysis of variance followed by calculation of intraclass correlation coefficients. Analysis of variance decomposes the experimentally recorded variation in test results into components due to individual factors. For example, if the subjects' results in some test are recorded on different days, with several attempts each day and with experimenters changed periodically, variation will occur:

a) from subject to subject;

b) from day to day;

c) from experimenter to experimenter;

d) from attempt to attempt.

Analysis of variance makes it possible to isolate and evaluate these variations.

Thus, in order to assess the practical reliability of the test, it is necessary, firstly, to perform an analysis of variance, and secondly, to calculate the intraclass correlation coefficient (reliability coefficient).
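A minimal sketch of these two steps for the simplest design, assuming only subjects and repeated attempts vary (a one-way random-effects analysis of variance); the day and experimenter factors listed above would need a multi-factor model. The function `icc_oneway` and the 4 × 3 data table are hypothetical. The function returns the intraclass correlation for a single attempt and for the mean of k attempts, often written ICC(1,1) and ICC(1,k).

```python
from statistics import fmean


def icc_oneway(scores):
    """One-way random-effects ICC from a table: one row per subject, one column per attempt.

    Returns (reliability of a single attempt, reliability of the mean of k attempts).
    Negative estimates can occur when between-subject variation is tiny; they are
    usually read as zero reliability.
    """
    n, k = len(scores), len(scores[0])
    if n < 2 or k < 2 or any(len(row) != k for row in scores):
        raise ValueError("need at least 2 subjects x 2 attempts in a rectangular table")
    grand = fmean(x for row in scores for x in row)
    row_means = [fmean(row) for row in scores]
    # Between-subject and within-subject (attempt-to-attempt) sums of squares.
    ss_between = k * sum((m - grand) ** 2 for m in row_means)
    ss_within = sum((x - m) ** 2 for row, m in zip(scores, row_means) for x in row)
    ms_between = ss_between / (n - 1)
    ms_within = ss_within / (n * (k - 1))
    icc_single = (ms_between - ms_within) / (ms_between + (k - 1) * ms_within)
    icc_mean = (ms_between - ms_within) / ms_between
    return icc_single, icc_mean


# Hypothetical results: 4 subjects x 3 attempts.
table = [
    [10, 11, 12],
    [14, 15, 16],
    [20, 21, 22],
    [5, 6, 7],
]
single, average = icc_oneway(table)
print(f"ICC (one attempt) = {single:.3f}, ICC (mean of 3) = {average:.3f}")
```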

With two attempts, the intraclass correlation coefficient practically coincides with the ordinary correlation coefficient between the results of the first and second attempts. Therefore, in such situations the ordinary correlation coefficient can be used to assess reliability (it estimates the reliability of one attempt rather than of the mean of two).
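A minimal sketch of this two-attempt shortcut, using the ordinary (Pearson) correlation between first and second attempts. The standing long jump numbers are hypothetical, and, as stated above, the result should be read as the reliability of a single attempt.

```python
from math import sqrt
from statistics import fmean


def pearson_r(x, y):
    """Ordinary (Pearson) correlation between two equal-length lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length samples with n >= 2")
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


# Hypothetical standing long jump results (cm) of six subjects, two attempts each.
attempt1 = [230, 245, 212, 260, 238, 251]
attempt2 = [234, 241, 218, 255, 240, 257]

r = pearson_r(attempt1, attempt2)  # reliability of ONE attempt
print(f"r = {r:.3f}")
```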

Speaking about the reliability of tests, it is necessary to distinguish between their stability (reproducibility), consistency, and equivalence.

Test stability is the reproducibility of results when the test is repeated after a certain time under the same conditions. Repeated testing is usually called a retest.

Test consistency is characterized by the independence of test results from the personal qualities of the person administering or evaluating the test.

When a test is selected from a number of similar tests (for example, sprints over 30, 60 and 100 m), the agreement of their results is assessed by the parallel-forms method. The correlation coefficient calculated between the results is called the equivalence coefficient.

If all the tests in a test set are highly equivalent, the set is called homogeneous. Such a set measures one particular property of human motor ability (for example, a set consisting of the standing long, vertical, and triple jumps assesses the level of development of speed-strength qualities). If the set contains no equivalent tests, that is, its tests measure different properties, it is called heterogeneous (for example, a set consisting of deadlift dynamometry, the Abalakov jump, and the 100 m run).

The reliability of tests can be increased to a certain extent by:

a) more stringent standardization of testing;

b) increasing the number of attempts;

c) increasing the number of evaluators (judges, experimenters) and the consistency of their opinions;

d) increasing the number of equivalent tests;

e) better motivation of the subjects.
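The list above does not quantify how much extra attempts or equivalent tests help. One standard estimate, not named in the source, is the Spearman-Brown prophecy formula $r_k = k r_1 / (1 + (k - 1) r_1)$, which assumes the added attempts are parallel in the sense defined for classical test theory. A minimal sketch with a hypothetical single-attempt reliability of 0.6:

```python
def spearman_brown(r_single: float, k: float) -> float:
    """Predicted reliability of the mean of k parallel attempts (Spearman-Brown formula)."""
    if k <= 0:
        raise ValueError("k must be positive")
    if not 0.0 <= r_single <= 1.0:
        raise ValueError("r_single must lie in [0, 1]")
    return k * r_single / (1 + (k - 1) * r_single)


# Hypothetical single-attempt reliability of 0.6.
for k in (1, 2, 3, 5):
    print(k, round(spearman_brown(0.6, k), 3))
```

Under this assumption each additional attempt adds less than the one before (0.6, 0.75, 0.818, 0.882), which fits the statement that reliability can be raised only to a certain extent.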

Example 10.1.

Determine the reliability of the standing triple jump results used to assess the speed-strength capabilities of sprinters, given the following sample data:

Solution:

1. Enter the test results into the worksheet:

2. Substitute the results obtained into the formula for calculating the rank correlation coefficient:

3. Determine the number of degrees of freedom using the formula:

Conclusion: the calculated value exceeds the tabulated critical value at the 0.01 level; therefore, with 99% confidence we can say that the standing triple jump test is reliable.
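The data, worksheet, and formulas of Example 10.1 are not preserved, so the sketch below does not reproduce its numbers. It only illustrates the procedure on hypothetical test-retest results: Spearman's rank correlation $\rho = 1 - 6\sum d^2 / (n(n^2 - 1))$, which is exact when there are no tied values, and, as an assumption, the usual $n - 2$ degrees of freedom. The computed value would then be compared with a critical value from a statistical table at the chosen significance level.

```python
def ranks(values):
    """Ranks 1..n in ascending order; this simple version assumes no tied values."""
    if len(set(values)) != len(values):
        raise ValueError("tied values: use average ranks instead")
    order = sorted(values)
    return [order.index(v) + 1 for v in values]


def spearman_rho(x, y):
    """Spearman rank correlation, rho = 1 - 6 * sum(d^2) / (n * (n^2 - 1))."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need two equal-length samples with n >= 3")
    n = len(x)
    d2 = sum((rx - ry) ** 2 for rx, ry in zip(ranks(x), ranks(y)))
    return 1 - 6 * d2 / (n * (n * n - 1))


# Hypothetical standing triple jump results (m): test and retest of eight sprinters.
session1 = [7.45, 7.80, 7.12, 8.05, 7.66, 7.30, 7.95, 7.58]
session2 = [7.50, 7.72, 7.20, 8.10, 7.61, 7.25, 7.98, 7.70]

rho = spearman_rho(session1, session2)
df = len(session1) - 2  # assumed: usual degrees of freedom for a correlation test
print(f"rho = {rho:.3f}, df = {df}")
```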

Fundamentals of test theory
1. Basic concepts of test theory
2. Test reliability and ways to determine it

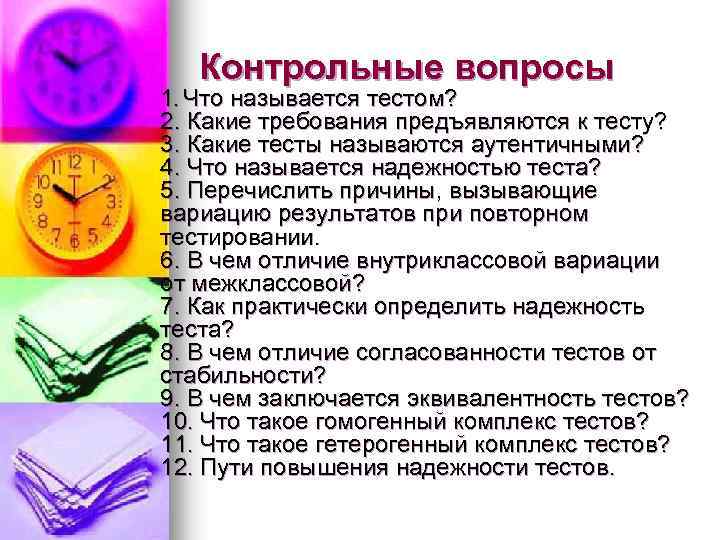

Review questions
1. What is a test?
2. What requirements must a test meet?
3. Which tests are called authentic?
4. What is the reliability of a test?
5. List the reasons that cause variation in results during repeated testing.
6. How does intraclass variation differ from interclass variation?
7. How can the reliability of a test be determined in practice?
8. What is the difference between test consistency and stability?
9. What is the equivalence of tests?
10. What is a homogeneous set of tests?
11. What is a heterogeneous set of tests?
12. What are the ways to improve the reliability of tests?

A test is a measurement or trial carried out to determine a person's condition or ability. Not all measurements can be used as tests, but only those that meet special requirements. These include: 1. standardization (the testing procedure and conditions must be the same in all cases of using the test); 2. reliability; 3. information content; 4. availability of a rating system.

Test requirements:
- Information content: the degree of accuracy with which the test measures the property (quality, ability, characteristic) it is used to evaluate.
- Reliability: the degree to which results agree when the same people are tested repeatedly under the same conditions.
- Consistency: agreement of results when different people administer the test with the same devices under the same conditions.
- Standardization of conditions: the same conditions for repeated measurements.
- Availability of a grading system: conversion of results into grades, as in school (5, 4, 3, ...).

Tests that meet the requirements of reliability and information content are called sound or authentic (Greek authentikos, "in a reliable manner").

The process of administering a test is called testing; the numerical value obtained from the measurement is the test result. For example, the 100 m run is a test, the procedure for conducting the races and timing them is testing, and the race time is the test result.

Tests based on motor tasks are called motor tests. Their results can be either motor achievements (time to cover a distance, number of repetitions, distance covered, etc.) or physiological and biochemical indicators.

Sometimes not one, but several tests are used that have a single final goal (for example, assessing the athlete’s condition during the competitive training period). Such a group of tests is called a set or battery of tests.

The same test, applied to the same subjects, should give identical results under the same conditions (unless the subjects themselves have changed). However, even with the most stringent standardization and precise equipment, test results always vary somewhat. For example, a subject who has just shown a result of 215 kG in the deadlift dynamometry test, when repeated, shows only 190 kG.

Reliability of tests and ways to determine it

The reliability of a test is the degree of agreement of results when the same people (or other objects) are tested repeatedly under the same conditions.

Variation in test-retest results is called within-individual, or within-group, or within-class. Four main reasons cause this variation: 1. Changes in the state of the subjects (fatigue, training, “learning,” changes in motivation, concentration, etc.). 2. Uncontrolled changes in external conditions and equipment (temperature, wind, humidity, voltage in the electrical network, the presence of unauthorized persons, etc.), i.e., everything that is united by the term “random measurement error.”

3. A change in the condition of the person administering or scoring the test (and, of course, the replacement of one experimenter or judge by another). 4. Imperfection of the test (some tests are inherently unreliable: for example, when subjects shoot free throws, even a basketball player with a high shooting percentage may miss the first few throws by chance).

The concept of a true test result is an abstraction (it cannot be measured experimentally). Therefore, we have to use indirect methods. The most preferable method for assessing reliability is analysis of variance followed by calculation of intraclass correlation coefficients. Analysis of variance allows us to decompose the experimentally recorded variation in test results into components due to the influence of individual factors.

If we record the subjects' results in a test on different days, making several attempts each day and periodically changing experimenters, variation will occur: a) from subject to subject; b) from day to day; c) from experimenter to experimenter; d) from attempt to attempt. Analysis of variance makes it possible to isolate and evaluate these variations.

Thus, to assess the practical reliability of a test, it is necessary, first, to perform an analysis of variance and, second, to calculate the intraclass correlation coefficient (reliability coefficient).

Speaking about the reliability of tests, it is necessary to distinguish between their stability (reproducibility), consistency, and equivalence. Test stability refers to the reproducibility of results when the test is repeated after a certain time under the same conditions. Repeated testing is usually called a retest. Test consistency is characterized by the independence of test results from the personal qualities of the person administering or evaluating the test.

If all the tests in a test set are highly equivalent, the set is called homogeneous. Such a set measures one property of human motor ability (for example, a set consisting of the standing long, vertical, and triple jumps assesses the level of development of speed-strength qualities). If the set contains no equivalent tests, that is, its tests measure different properties, it is called heterogeneous (for example, a set consisting of deadlift dynamometry, the Abalakov jump, and the 100 m run).

Test reliability can be improved to a certain extent by: a) more stringent standardization of testing; b) increasing the number of attempts; c) increasing the number of evaluators (judges, experimenters) and the consistency of their opinions; d) increasing the number of equivalent tests; e) better motivation of the subjects.
